So, what’s a LEM and why do I want one?

It seems you can’t go anywhere these days without someone talking or writing about Large Language Models (LLMs), the technology that is the backbone of products such as ChatGPT or DALL-E. However, amazing as the technology is, LLMs in the main ‘simply’ attempt to mimic human behaviour: they produce text, stories, images and videos, and their output is often subjective.

Enter the world of engineering, and the laws of physics and calculus that govern what happens within: much less subjectivity and much more fact. Because of these rules, Large Engineering Models (LEMs), and the sets of data they are trained on, are estimated to be smaller, more affordable, and arguably much more useful when it comes to critical engineering issues such as the drive to decarbonisation.

So, what is a LEM?

Large Engineering Models are a specialised subset of machine learning models, designed to tackle complex engineering and physics challenges. Unlike traditional machine learning models that focus on general tasks like image recognition and generation or language processing, LEMs are tailored to simulate, predict, and optimise engineering systems and physical phenomena. These models are built using datasets comprising scientific principles, simulation data, and often real-world measurements. By leveraging advanced algorithms and computational power, LEMs can understand and solve intricate problems that would typically require extensive and lengthy manual computation and expertise.

One of the standout features of LEMs is their domain-specific knowledge, which allows them to provide accurate and relevant solutions within specific engineering and physics fields. Additionally, LEMs are scalable, making them suitable for large-scale problems in industries such as aerospace, automotive, energy, and manufacturing.

Their predictive capabilities enable the simulation and forecasting of outcomes, which helps engineers design systems, anticipate potential issues, and optimise performance. The outputs of LEMs can also be verified in a much more objective way than the outputs of LLMs. If an LLM outputs, say, two poems, it may be hard to judge which one is better, because language and art are subjective and different people will likely have different opinions and perceptions. Since the outputs of a LEM are engineering quantities and scientific predictions, they can be verified against either experimental data or high-precision numerical simulations. This makes training LEMs easier and gives us a way to check that LEM outputs are correct.
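To make the idea of objective verification concrete, here is a minimal Python sketch that compares model predictions with reference values and checks them against a tolerance. Everything in it is an assumption for illustration: the function names, the 5% tolerance and the placeholder numbers are hypothetical, and they are not taken from any real LEM, measurement or simulation.

```python
def relative_errors(predicted, reference):
    """Relative error of each prediction against its reference value."""
    return [abs(p - r) / abs(r) for p, r in zip(predicted, reference)]


def within_tolerance(predicted, reference, tolerance=0.05):
    """True if every prediction is within `tolerance` of its reference."""
    return max(relative_errors(predicted, reference)) <= tolerance


# Placeholder numbers only (hypothetical, not real measurements):
# a quantity predicted by a model versus the same quantity from a
# trusted reference such as an experiment or a high-precision simulation.
predicted = [100.0, 52.0]
reference = [98.0, 50.0]

print(relative_errors(predicted, reference))
print(within_tolerance(predicted, reference))
```

No equivalent check exists for judging which of two poems is better, which is the point of the comparison above.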

Both LEMs and LLMs are built on the same underlying principles and technology; that of a neural network (or if you are a computer scientist, a complex transformer-based neural network). However, there are also differences between the two; probably the biggest being the size of the datasets needed to train each, and therefore the costs incurred in processing them. We expect LEMs to be a lot smaller and cheaper, but in many ways much more valuable societally and financially.

Why do I want one?

The short answer is speed: the speed with which you can discover near-optimal designs. Traditional engineering computations can be time-consuming and resource-intensive, but LEMs can dramatically reduce the time required to analyse and solve problems, thereby accelerating the design and development process. The precision of LEMs helps to make solutions reliable, which reduces the risk of errors that can lead to costly revisions or failures in real-world applications. Moreover, by optimising designs and processes, LEMs help to reduce material waste, improve energy efficiency, and lower production costs.

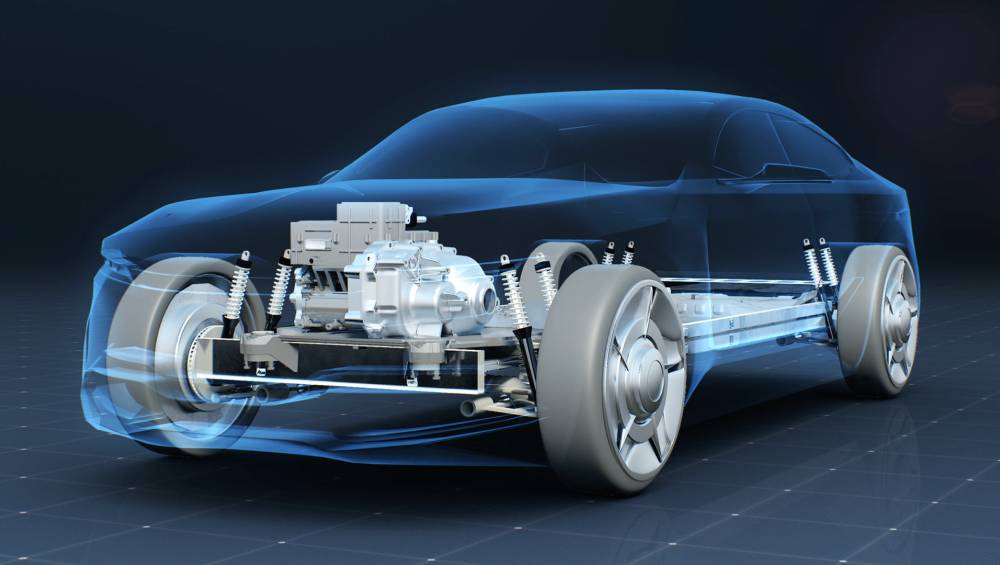

Currently, if you want to look at, say, a new motor design, you first define the criteria you want to improve (a power output of X, a weight of Y, or Z% fewer grams of permanent magnets could be examples), and then you need to optimise your motor to fulfil those requirements. Whilst industry can already do this today, it needs to use templates: a compromise between how your new design might look and the limited number of things you can change (parameters) that current design optimisation tools can cope with.

Bear in mind that every time you add a parameter, the number of designs you need to evaluate is multiplied by the number of options for that parameter, so the number of calculations grows exponentially with the number of parameters.

Here’s a simple example: suppose I constrain myself to looking at the design of the motor in a small passenger car, and the only things I can change are the length and the radius of the motor. If there are 40 different options for the length and 10 possible values for the radius, then I can very quickly find the optimal solution for my application by looking at just 400 permutations (40 x 10). In reality, motor design is vastly more complex, and even in this simple example we quickly end up with an equation that looks more like 40 (length) x 10 (radius) x 5 (magnet configurations) x 10 (stator configurations) = 20,000 permutations. And even this is still ridiculously simplified.

However, what that simplified example clearly shows is that by adding just two more parameters, the number of computations you need to make increases by a factor of 50! And, in the real world, the number of template-free parameters we need to consider isn’t just 5 or 10, but much more likely to be several hundred or even a few thousand with the obvious exponential growth in the compute power required. Current industry standard tools simply cannot cope with these degrees of freedom.
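The arithmetic above can be checked with a few lines of Python. This is only a sketch of a brute-force grid over the options: the option counts come from the example, whilst the dictionary keys and function names are made up for illustration.

```python
from itertools import product
from math import prod

# Option counts taken from the example in the text.
two_params = {"length": 40, "radius": 10}
four_params = {**two_params, "magnet_configuration": 5, "stator_configuration": 10}


def design_space_size(option_counts):
    """Number of distinct designs in a full grid over the given parameters."""
    return prod(option_counts.values())


def enumerate_designs(option_counts):
    """Yield every combination of option indices (brute-force grid search)."""
    return product(*(range(n) for n in option_counts.values()))


def uniform_grid_size(options_per_parameter, n_parameters):
    """Grid size when every parameter has the same number of options."""
    return options_per_parameter ** n_parameters


small = design_space_size(two_params)
large = design_space_size(four_params)
print(small, large, large // small)  # 400 20000 50

# Hypothetical scaling case: 100 parameters with 10 options each.
print(uniform_grid_size(10, 100) == 10 ** 100)  # True
```

Even with a modest 10 options per parameter, 100 parameters give 10^100 combinations, which is why exhaustively enumerating the design space stops being an option long before you reach several hundred parameters.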

Advanced ML and data-driven approaches are needed to tackle this huge complexity of the design space. A LEM is a system that has been trained on large quantities of engineering and scientific data and has built some “understanding” of the laws of electromagnetics and thermodynamics, and of mechanical constraints. LEMs can use that knowledge to quickly and precisely predict what will happen when a design is modified in some way.

Here’s an example to help explain. Imagine a talented orchestra, where each musician is highly skilled in their respective instrument. Together, they can perform beautiful symphonies. However, coordinating everyone to play in perfect harmony takes a lot of effort, practice, and time, guided by a skilled conductor who knows the score intimately and can make real-time adjustments to ensure the performance is flawless.

Compare this to traditional engineering methods, where each engineer is skilled in their specialised field. Together, they can solve complex problems and they have some useful tools to help them along the way. However, coordinating everyone to think about genuine system-level engineering that optimises performance is, and remains, a significant challenge.

Now, envision the LEM as the conductor of this orchestra. This conductor isn't just any maestro; it is powered by AI and has learnt from analysing thousands of performances, trained on vast amounts of engineering and scientific data. It understands the intricacies of each instrument and how they should interact to create the perfect harmony.

As with the conductor, the LEM can instantly interpret the score (the engineering problem), predict how each section of the orchestra should play, and make precise adjustments to ensure the best performance. It can see the entire piece from start to finish and knows the optimal way to achieve the desired outcome, whether it’s adjusting the tempo, balancing the sections, or enhancing specific parts of the performance. Or, put another way, system-level engineering.

Just as a conductor with perfect knowledge and insight can lead an orchestra to perform complex symphonies with efficiency and precision, LEMs guide engineering projects by processing and analysing large datasets, simulating potential outcomes, and providing optimal solutions quickly and accurately. This advanced conductor, the LEM, ensures that all elements of the engineering project work together seamlessly, achieving results that are innovative, highly efficient and delivered at incredible speed.

LEMs bring a level of strategic oversight, precision, and innovation that transforms the engineering process, much like how a great conductor elevates an orchestra’s performance to unprecedented levels.

Conclusion

A LEM delivers accurate and innovative results in a fraction of the time of current approaches. And once you have built your LEM, you have speed, and you no longer need enormous amounts of compute power to find optimal engineering designs. LEMs will, in short, deliver the future of engineering solutions. For electric motor powertrain design, they will enable true system-level optimisation across all powertrain components and give us a tool to discover innovative and unintuitive motor solutions that will not only create significant value, but also help our move to decarbonising transport.